Deploying a Highly Available Ceph Cluster with rook-ceph

1. Rook Introduction and Installation

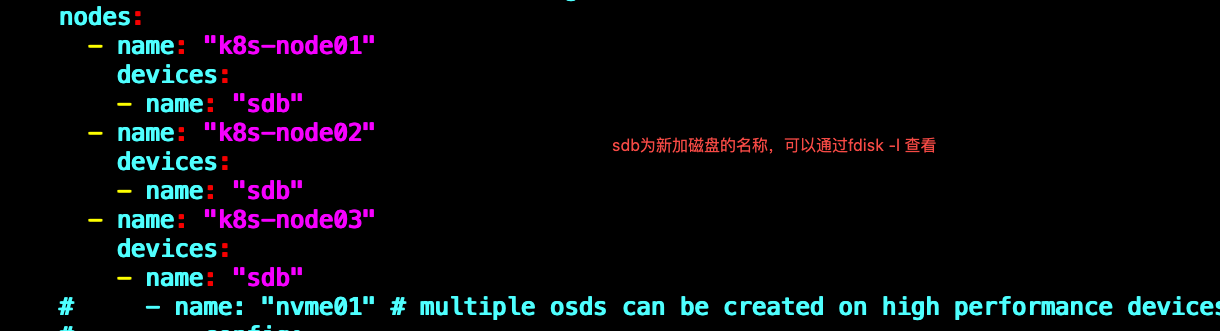

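Note 1: For Rook versions later than 1.3, do not create the cluster from directories. Use a dedicated raw disk instead: attach a new disk to the host and leave it unformatted so that Rook can consume it directly. The per-node disk configuration is given in the storage section of cluster.yaml (section 2.1).

Note 2: This lab needs reasonably sized machines. Each node should have at least 2 CPU cores and 4 GB of memory.

Note 3: On Kubernetes 1.19 and later, the snapshot feature requires a separately installed snapshot controller.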
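Note 1 asks for an unformatted raw disk. The following is a minimal sketch of how that could be checked on a storage node before handing the disk to Rook. It assumes the new disk is /dev/sdb, as in section 2.1; `is_unformatted` and `check_device` are hypothetical helper names and not part of Rook.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds when the FSTYPE text reported by lsblk is empty,
# i.e. no filesystem signature was found.
is_unformatted() {
  local fstype
  fstype="$(printf '%s' "$1" | tr -d '[:space:]')"
  [ -z "$fstype" ]
}

# Hypothetical wrapper: reads the FSTYPE column of a device (and its partitions)
# and reports whether anything is already formatted.
check_device() {
  local dev="$1"
  if is_unformatted "$(lsblk -n -o FSTYPE "$dev")"; then
    echo "$dev looks unformatted"
  else
    echo "$dev already carries a filesystem signature" >&2
    return 1
  fi
}
```

Running `check_device /dev/sdb` on each storage node would then flag disks that were formatted by mistake.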

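1.1 Rook Introduction

Rook is an open-source, cloud-native storage orchestrator. It provides the platform, framework, and support needed to integrate a range of storage services seamlessly into cloud-native environments. Ceph is one of the storage providers that Rook supports. On Kubernetes, Ceph running under Rook can offer applications block storage, object storage, and a shared file system.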

https://rook.io/docs/rook/v1.14/Getting-Started/quickstart/#prerequisites

1.2 Downloading the Rook Manifests

Download a specific Rook version:

$ git clone --single-branch --branch v1.14.7 https://github.com/rook/rook.git

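1.3 Configuration Changes

[root@k8s-master01 examples]# cd /root/yaml/rook/rook/deploy/examples

Change the Rook CSI image addresses. The default images may be hosted on gcr, which cannot be reached from mainland China, so I pulled them on a machine in Hong Kong and pushed them to my local registry for download.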

[root@k8s-master01 examples]# egrep -v "#|^$" operator.yaml

# ConfigMap that defines the Rook Ceph Operator settings
kind: ConfigMap
apiVersion: v1
metadata:
  name: rook-ceph-operator-config
data:
  # Log level
  ROOK_LOG_LEVEL: "INFO"
  # Whether loop devices may be used
  ROOK_CEPH_ALLOW_LOOP_DEVICES: "false"
  # Whether to enable the CephFS CSI driver
  ROOK_CSI_ENABLE_CEPHFS: "true"
  # Whether to enable the RBD CSI driver
  ROOK_CSI_ENABLE_RBD: "true"
  # Whether to enable the NFS CSI driver
  ROOK_CSI_ENABLE_NFS: "false"
  # Whether to disable the CSI driver
  ROOK_CSI_DISABLE_DRIVER: "false"
  # Whether to enable CSI encryption
  CSI_ENABLE_ENCRYPTION: "false"
  # Whether to disable the CSI holder pods
  CSI_DISABLE_HOLDER_PODS: "true"
  # Number of CSI provisioner replicas
  CSI_PROVISIONER_REPLICAS: "2"
  # Whether to enable the CephFS snapshotter
  CSI_ENABLE_CEPHFS_SNAPSHOTTER: "true"
  # Whether to enable the NFS snapshotter
  CSI_ENABLE_NFS_SNAPSHOTTER: "true"
  # Whether to enable the RBD snapshotter
  CSI_ENABLE_RBD_SNAPSHOTTER: "true"
  # Whether to enable volume group snapshots
  CSI_ENABLE_VOLUME_GROUP_SNAPSHOT: "true"
  # Whether to force the CephFS kernel client
  CSI_FORCE_CEPHFS_KERNEL_CLIENT: "true"
  # fsGroupPolicy of the RBD CSI driver
  CSI_RBD_FSGROUPPOLICY: "File"
  # fsGroupPolicy of the CephFS CSI driver
  CSI_CEPHFS_FSGROUPPOLICY: "File"
  # fsGroupPolicy of the NFS CSI driver
  CSI_NFS_FSGROUPPOLICY: "File"
  # Whether an unsupported Ceph-CSI version may be used
  ROOK_CSI_ALLOW_UNSUPPORTED_VERSION: "false"
  # Whether to enable the SELinux host mount
  CSI_PLUGIN_ENABLE_SELINUX_HOST_MOUNT: "false"
  # Ceph CSI driver image
  ROOK_CSI_CEPH_IMAGE: "harbor.dujie.com/dujie/cephcsi:v3.11.0"
  # CSI node driver registrar image
  ROOK_CSI_REGISTRAR_IMAGE: "harbor.dujie.com/dujie/csi-node-driver-registrar:v2.10.1"
  # CSI resizer image
  ROOK_CSI_RESIZER_IMAGE: "harbor.dujie.com/dujie/csi-resizer:v1.10.1"
  # CSI provisioner image
  ROOK_CSI_PROVISIONER_IMAGE: "harbor.dujie.com/dujie/csi-provisioner:v4.0.1"
  # CSI snapshotter image
  ROOK_CSI_SNAPSHOTTER_IMAGE: "harbor.dujie.com/dujie/csi-snapshotter:v7.0.2"
  # CSI attacher image
  ROOK_CSI_ATTACHER_IMAGE: "harbor.dujie.com/dujie/csi-attacher:v4.5.1"
  # Priority class name of the CSI plugin pods
  CSI_PLUGIN_PRIORITY_CLASSNAME: "system-node-critical"
  # Priority class name of the CSI provisioner pods
  CSI_PROVISIONER_PRIORITY_CLASSNAME: "system-cluster-critical"
  # Tolerations of the CSI provisioner pods
  CSI_PROVISIONER_TOLERATIONS: |
    - effect: NoSchedule
      key: node-role.kubernetes.io/control-plane
      operator: Exists
    - effect: NoExecute
      key: node-role.kubernetes.io/etcd
      operator: Exists
  # Whether to enable the CSI liveness sidecar
  CSI_ENABLE_LIVENESS: "false"
  # Whether object bucket claims are watched in the operator namespace
  ROOK_OBC_WATCH_OPERATOR_NAMESPACE: "true"
  # Whether to enable the discovery daemon
  ROOK_ENABLE_DISCOVERY_DAEMON: "true"
  # Timeout for Ceph commands, in seconds
  ROOK_CEPH_COMMANDS_TIMEOUT_SECONDS: "15"
  # Whether to enable the CSI-Addons sidecar
  CSI_ENABLE_CSIADDONS: "false"
  # Whether to watch for node failures
  ROOK_WATCH_FOR_NODE_FAILURE: "true"
  # CSI gRPC timeout, in seconds
  CSI_GRPC_TIMEOUT_SECONDS: "150"
  # Whether to enable topology-based provisioning
  CSI_ENABLE_TOPOLOGY: "false"
  # Whether the CephFS driver requires an attach step
  CSI_CEPHFS_ATTACH_REQUIRED: "true"
  # Whether the RBD driver requires an attach step
  CSI_RBD_ATTACH_REQUIRED: "true"
  # Whether the NFS driver requires an attach step
  CSI_NFS_ATTACH_REQUIRED: "true"
  # Whether to disable device hotplug handling
  ROOK_DISABLE_DEVICE_HOTPLUG: "false"
  # Device discovery interval
  ROOK_DISCOVER_DEVICES_INTERVAL: "60m"
---
# Deployment that runs the Rook Ceph Operator
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rook-ceph-operator # Deployment name
  labels:
    operator: rook # label that identifies the operator
    storage-backend: ceph # storage backend type
    app.kubernetes.io/name: rook-ceph # application name
    app.kubernetes.io/instance: rook-ceph # application instance name
    app.kubernetes.io/component: rook-ceph-operator # component name
    app.kubernetes.io/part-of: rook-ceph-operator # parent application
spec:
  selector:
    matchLabels:
      app: rook-ceph-operator # selector used to match the pods
  strategy:
    type: Recreate # update strategy
  replicas: 1 # replica count
  template:
    metadata:
      labels:
        app: rook-ceph-operator # pod label
    spec:
      tolerations:
        - effect: NoExecute
          key: node.kubernetes.io/unreachable
          operator: Exists
          tolerationSeconds: 5
      serviceAccountName: rook-ceph-system # service account in use
      containers:
        - name: rook-ceph-operator # container name
          image: harbor.dujie.com/dujie/ceph:v1.14.7 # image in use
          args: ["ceph", "operator"] # start arguments
          securityContext:
            runAsNonRoot: true # run as a non-root user
            runAsUser: 2016 # user ID
            runAsGroup: 2016 # group ID
            capabilities:
              drop: ["ALL"] # drop all capabilities
          volumeMounts:
            - mountPath: /var/lib/rook # mount path
              name: rook-config # name of the mounted volume
            - mountPath: /etc/ceph # mount path
              name: default-config-dir # name of the mounted volume

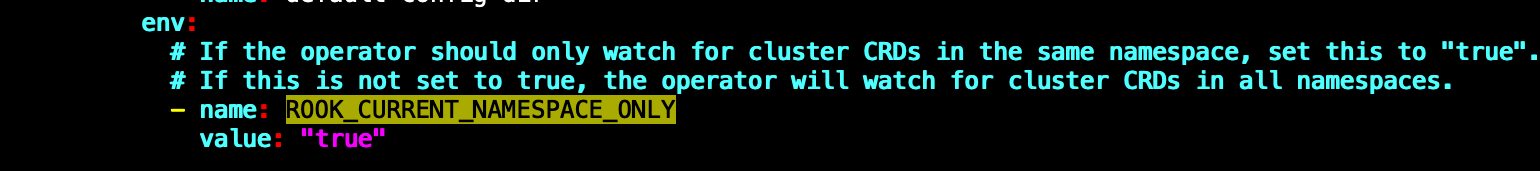

          env:
            - name: ROOK_CURRENT_NAMESPACE_ONLY
              value: "true"
            - name: ROOK_HOSTPATH_REQUIRES_PRIVILEGED
              value: "false"
            - name: DISCOVER_DAEMON_UDEV_BLACKLIST
              value: "(?i)dm-[0-9]+,(?i)rbd[0-9]+,(?i)nbd[0-9]+"
            - name: ROOK_UNREACHABLE_NODE_TOLERATION_SECONDS
              value: "5"
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      volumes:
        - name: rook-config
          emptyDir: {} # emptyDir volume
        - name: default-config-dir
          emptyDir: {} # emptyDir volume

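The images referenced above must be mirrored to your own registry. Newer Rook versions also disable the device discovery daemon by default. To deploy it, find ROOK_ENABLE_DISCOVERY_DAEMON in the ConfigMap and set it to "true".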

1.4 Deploying Rook

I am deploying v1.14 here.

[root@k8s-master01 examples]# cd /root/yaml/rook/rook/deploy/examples

# Run this command (crds.yaml and common.yaml need no changes)

[root@k8s-master01 examples]# kubectl create -f crds.yaml -f common.yaml -f operator.yaml

[root@k8s-master01 rook]# kubectl get pods -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-7c566fbc4f-64f89 1/1 Running 0 3m50s

rook-discover-4cjmw 1/1 Running 0 49m

rook-discover-6skl9 1/1 Running 0 45m

rook-discover-pxj94 1/1 Running 0 49m

rook-discover-qwqkf 1/1 Running 0 49m

rook-discover-wl4tn 1/1 Running 0 49m

All pods must be 1/1 Running before the Ceph cluster can be created.
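Instead of polling `kubectl get pods` by hand, the readiness check can be scripted. This is a minimal sketch: `wait_for_pods` is a hypothetical helper name, the label `app=rook-ceph-operator` comes from the operator.yaml shown above, and `app=rook-discover` is assumed to be the label on the discovery pods.

```shell
#!/usr/bin/env bash
# Hypothetical helper: block until every pod matching a label selector in the
# rook-ceph namespace reports the Ready condition, or until the timeout expires.
wait_for_pods() {
  local selector="$1" timeout="${2:-300s}"
  kubectl -n rook-ceph wait --for=condition=Ready pod \
    -l "$selector" --timeout="$timeout"
}
```

For example, `wait_for_pods app=rook-ceph-operator && wait_for_pods app=rook-discover` returns only once both sets of pods are ready.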

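2. Creating the Ceph Cluster

2.1 Configuration Changes

Edit cluster.yaml and set the OSD nodes. Newer versions require raw disks, that is, disks that have not been formatted. I chose three nodes here. The disks can be inspected with lsblk: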

[root@k8s-node01 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 300M 0 part /boot

├─sda2 8:2 0 7.9G 0 part

└─sda3 8:3 0 91.9G 0 part /

sdb 8:16 0 100G 0 disk

sr0 11:0 1 1024M 0 rom

The matching storage section in cluster.yaml:

  storage: # cluster level storage configuration and selection
    useAllNodes: false
    useAllDevices: false
    ...
    nodes:
      - name: "k8s-node01"
        devices:
          - name: "sdb"
      - name: "k8s-node02"
        devices:
          - name: "sdb"
      - name: "k8s-node03"
        devices:
          - name: "sdb"
    ....

2.2 Creating the Ceph Cluster

[root@k8s-master01 examples]# kubectl apply -f cluster.yaml

Once it has been created, check the pod status:

[root@k8s-master01 ~]# kubectl get pods -n rook-ceph

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-5g28z 2/2 Running 0 62m

csi-cephfsplugin-6qdzp 2/2 Running 0 62m

csi-cephfsplugin-7fkpb 2/2 Running 0 62m

csi-cephfsplugin-h6jmn 2/2 Running 0 62m

csi-cephfsplugin-provisioner-cc7987fb7-52mgq 5/5 Running 0 62m

csi-cephfsplugin-provisioner-cc7987fb7-mhsjs 5/5 Running 0 62m

csi-cephfsplugin-qv4cx 2/2 Running 0 62m

csi-rbdplugin-552l5 2/2 Running 0 62m

csi-rbdplugin-hs79b 2/2 Running 0 62m

csi-rbdplugin-jrfd5 2/2 Running 0 62m

csi-rbdplugin-n6kkx 2/2 Running 0 62m

csi-rbdplugin-provisioner-85c667b47b-tjl5s 5/5 Running 0 62m

csi-rbdplugin-provisioner-85c667b47b-xrbbz 5/5 Running 0 62m

csi-rbdplugin-qk6ln 2/2 Running 0 62m

rook-ceph-crashcollector-k8s-node02-7fcbff9677-qbghz 1/1 Running 0 61m

rook-ceph-crashcollector-k8s-node03-849b95bd-b55pq 1/1 Running 0 61m

rook-ceph-crashcollector-k8s-node04-6b759cd7dc-tlq2v 1/1 Running 0 61m

rook-ceph-exporter-k8s-node02-6ddc75ddb8-7fm7d 1/1 Running 0 61m

rook-ceph-exporter-k8s-node03-585c554f77-2ggt2 1/1 Running 0 61m

rook-ceph-exporter-k8s-node04-6898d69454-lncs2 1/1 Running 0 61m

rook-ceph-mgr-a-55bcdd48bd-zf8mr 3/3 Running 0 61m

rook-ceph-mgr-b-65444cc96f-bwsh5 3/3 Running 0 61m

rook-ceph-mon-a-66fcf4fdf7-242sp 2/2 Running 0 62m

rook-ceph-mon-b-55f9d8cf8-tcq7r 2/2 Running 0 61m

rook-ceph-mon-c-7d8c78b9dd-tq6c5 2/2 Running 0 61m

rook-ceph-operator-7c566fbc4f-vkn29 1/1 Running 0 62m

rook-ceph-osd-prepare-k8s-node01-dsnb9 0/1 Completed 0 59m

rook-ceph-osd-prepare-k8s-node02-jxpxv 0/1 Completed 0 59m

rook-ceph-osd-prepare-k8s-node03-mzgdd 0/1 Completed 0 59m

rook-discover-bgt9d 1/1 Running 0 62m

rook-discover-gjxvl 1/1 Running 0 62m

rook-discover-kvmff 1/1 Running 0 62m

rook-discover-rjfh7 1/1 Running 0 62m

rook-discover-t4z4s 1/1 Running 0 62m

The installation has succeeded only when the osd-x pods exist and are in a healthy state.

More configuration options: https://rook.io/docs/rook/v1.6/ceph-cluster-crd.html
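Besides reading the pod list, the cluster state can be queried from the CephCluster resource itself. A minimal sketch, assuming the CephCluster is named rook-ceph (the name that appears in the ownerReferences later in this article) and that the OSD pods carry the label `app=rook-ceph-osd`. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Phase of the CephCluster resource as reported by the operator.
cluster_phase() {
  kubectl -n rook-ceph get cephcluster rook-ceph -o jsonpath='{.status.phase}'
}

# Ceph health string (for example HEALTH_OK or HEALTH_WARN).
cluster_health() {
  kubectl -n rook-ceph get cephcluster rook-ceph -o jsonpath='{.status.ceph.health}'
}

# List only the OSD pods (label assumed, see the note above).
list_osd_pods() {
  kubectl -n rook-ceph get pods -l app=rook-ceph-osd
}
```

Calling `cluster_phase`, `cluster_health`, and `list_osd_pods` in turn gives a quick picture of whether the OSDs came up.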

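2.3 Installing the Snapshot Controller

On Kubernetes 1.19 and later, the snapshot controller must be installed separately before PVC snapshots work, so install it ahead of time here. On versions below 1.19, no separate installation is needed.

The snapshot controller manifests are part of the k8s-ha-install project that was used when the cluster was installed.

# Switch to the branch that matches your Kubernetes version

cd /root/k8s-ha-install/

git checkout manual-installation-v1.20.x

Create the snapshot controller: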

[root@k8s-master01 ~]# kubectl create -f snapshotter/ -n kube-system

[root@k8s-master01 ~]# kubectl get pods -n kube-system -l app=snapshot-controller

NAME READY STATUS RESTARTS AGE

snapshot-controller-0 1/1 Running 1 (17h ago) 21h

3. Installing the Ceph Client Tools

[root@k8s-master01 examples]# pwd

/root/yaml/rook/rook/deploy/examples

[root@k8s-master01 examples]# kubectl create -f toolbox.yaml -n rook-ceph

After the installation, check the pod status:

[root@k8s-master01 examples]# kubectl get pods -n rook-ceph -l app=rook-ceph-tools

NAME READY STATUS RESTARTS AGE

rook-ceph-tools-5b54d6cd97-nr9xt 1/1 Running 0 12s

[root@k8s-master01 examples]# kubectl exec -it rook-ceph-tools-5b54d6cd97-w84mm bash -n rook-ceph

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

bash-4.4$
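The deprecation warning above recommends the `--` form of `kubectl exec`. A minimal sketch of a wrapper that uses it and runs a single Ceph command without opening an interactive shell. `toolbox` is a hypothetical helper name, and the Deployment name rook-ceph-tools is inferred from the pod name shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run one command inside the toolbox Deployment.
# Everything after "--" is passed to the container unchanged.
toolbox() {
  kubectl -n rook-ceph exec deploy/rook-ceph-tools -- "$@"
}
```

With this in place, `toolbox ceph status`, `toolbox ceph osd status`, and `toolbox ceph df` would produce the same kind of output as the interactive session that follows.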

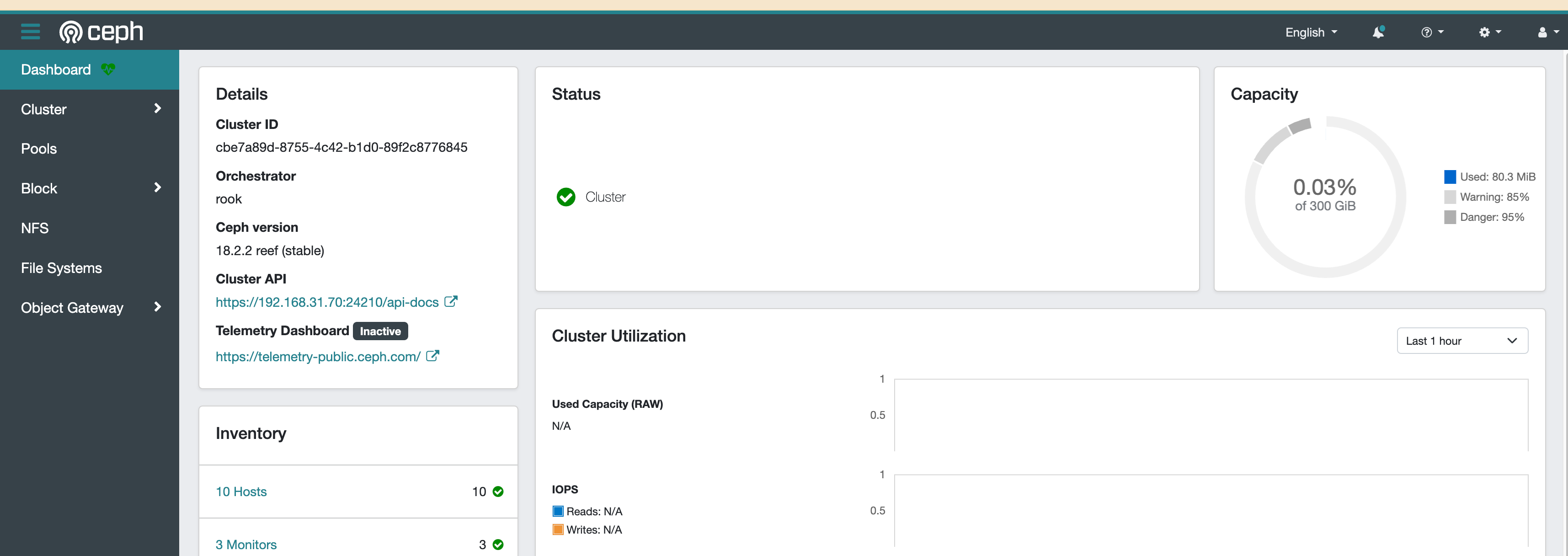

bash-4.4$ ceph status

cluster:

id: cbe7a89d-8755-4c42-b1d0-89f2c8776845

health: HEALTH_WARN

clock skew detected on mon.b, mon.c

services:

mon: 3 daemons, quorum a,b,c (age 3m)

mgr: a(active, since 74s), standbys: b

osd: 3 osds: 3 up (since 2m), 3 in (since 3m)

data:

pools: 1 pools, 1 pgs

objects: 2 objects, 449 KiB

usage: 80 MiB used, 300 GiB / 300 GiB avail

pgs: 1 active+clean

bash-4.4$

bash-4.4$ ceph osd status

ID HOST USED AVAIL WR OPS WR DATA RD OPS RD DATA STATE

0 k8s-node02 26.7M 99.9G 0 0 0 0 exists,up

1 k8s-node01 26.7M 99.9G 0 0 0 0 exists,up

2 k8s-node03 26.7M 99.9G 0 0 0 0 exists,up

bash-4.4$ ceph df

--- RAW STORAGE ---

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 300 GiB 300 GiB 80 MiB 80 MiB 0.03

TOTAL 300 GiB 300 GiB 80 MiB 80 MiB 0.03

--- POOLS ---

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

.mgr 1 1 449 KiB 2 1.3 MiB 0 95 GiB

4. Ceph Dashboard

[root@k8s-master01 examples]# kubectl get svc -n rook-ceph

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rook-ceph-exporter ClusterIP 10.96.41.29 <none> 9926/TCP 5m17s

rook-ceph-mgr ClusterIP 10.96.76.115 <none> 9283/TCP 4m58s

rook-ceph-mgr-dashboard ClusterIP 10.96.163.161 <none> 8443/TCP 4m58s

rook-ceph-mon-a ClusterIP 10.96.233.150 <none> 6789/TCP,3300/TCP 6m10s

rook-ceph-mon-b ClusterIP 10.96.236.149 <none> 6789/TCP,3300/TCP 5m43s

rook-ceph-mon-c ClusterIP 10.96.6.244 <none> 6789/TCP,3300/TCP 5m33s

The Ceph dashboard is enabled by default, but its Service is of type ClusterIP. Create a NodePort Service to expose it:

[root@k8s-master01 examples]# kubectl get svc -n rook-ceph rook-ceph-mgr-dashboard -o yaml > dashboard-test.yaml

# Remove the fields that are not needed from the exported file, then change the Service name and type

[root@k8s-master01 examples]# vim dashboard-test.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
  name: rook-ceph-mgr-dashboard-np
  namespace: rook-ceph
spec:
  ports:
    - name: https-dashboard
      port: 8443
      protocol: TCP
      targetPort: 8443
  selector:
    app: rook-ceph-mgr
    mgr_role: active
    rook_cluster: rook-ceph
  type: NodePort

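After creating the Service, list the Services again. The dashboard can then be reached through any node IP plus the NodePort, for example: https://192.168.31.70:24210/#/login?returnUrl=%2Fdashboard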

[root@k8s-master01 examples]# kubectl get svc -n rook-ceph

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rook-ceph-exporter ClusterIP 10.96.41.29 <none> 9926/TCP 8m56s

rook-ceph-mgr ClusterIP 10.96.76.115 <none> 9283/TCP 8m37s

rook-ceph-mgr-dashboard ClusterIP 10.96.163.161 <none> 8443/TCP 8m37s

rook-ceph-mgr-dashboard-np NodePort 10.96.164.239 <none> 8443:24210/TCP 81s

rook-ceph-mon-a ClusterIP 10.96.233.150 <none> 6789/TCP,3300/TCP 9m49s

rook-ceph-mon-b ClusterIP 10.96.236.149 <none> 6789/TCP,3300/TCP 9m22s

rook-ceph-mon-c ClusterIP 10.96.6.244 <none> 6789/TCP,3300/TCP 9m12s
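The NodePort is assigned by the cluster, so it can be read back instead of copied from the table. A minimal sketch, using the Service name rook-ceph-mgr-dashboard-np created above. `dashboard_node_port` and `dashboard_url` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Read the NodePort assigned to the first port of the dashboard Service.
dashboard_node_port() {
  kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard-np \
    -o jsonpath='{.spec.ports[0].nodePort}'
}

# Build the dashboard URL for a given node IP.
dashboard_url() {
  printf 'https://%s:%s/\n' "$1" "$(dashboard_node_port)"
}
```

For the node used in this article, the call would be `dashboard_url 192.168.31.70`.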

The dashboard password is stored in a Secret:

[root@k8s-master01 examples]# kubectl get secret -n rook-ceph rook-ceph-dashboard-password -o jsonpath={'.data.password'} |base64 --decode

+1vafV"-w"(,_BGvq](l

After logging in, the dashboard home page may show "MON_CLOCK_SKEW: clock skew detected on mon.b, mon.c". There are two ways to resolve this clock skew:

- Synchronize the node clocks with NTP

- Change the default mon clock drift allowed value in the ConfigMap

[root@k8s-master01 examples]# kubectl get configmaps -n rook-ceph rook-config-override

NAME DATA AGE

rook-config-override 1 14m

[root@k8s-master01 examples]# kubectl edit configmaps -n rook-ceph rook-config-override

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

#

apiVersion: v1
data:
  config: |
    [global]
    mon clock drift allowed = 1
kind: ConfigMap
metadata:
  creationTimestamp: "2024-06-26T03:09:36Z"
  name: rook-config-override
  namespace: rook-ceph
  ownerReferences:
  - apiVersion: ceph.rook.io/v1
    blockOwnerDeletion: true
    controller: true
    kind: CephCluster
    name: rook-ceph
    uid: 5ddfcfb5-48db-4fd9-92c3-ece3af796125
  resourceVersion: "2068535"
  uid: e9b35b14-108c-412a-b84b-3fec3529057c

The following content was added:

  config: |
    [global]
    mon clock drift allowed = 1

The value can be chosen according to the actual clock difference between your nodes, somewhere between 0.5 and 1 s. Larger values are not recommended. After the change, delete the mon pods so that they load the new configuration.

[root@k8s-master01 examples]# kubectl delete pods -n rook-ceph rook-ceph-mon-a-6c6b5cdf78-wbv4r rook-ceph-mon-b-df4ffdc4c-hbf6t rook-ceph-mon-c-5c66c69c94-rw446

pod "rook-ceph-mon-a-6c6b5cdf78-wbv4r" deleted

pod "rook-ceph-mon-b-df4ffdc4c-hbf6t" deleted

pod "rook-ceph-mon-c-5c66c69c94-rw446" deleted

The cluster status then returns to healthy.
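The mon pod names contain generated hashes, so deleting them by label avoids copying names. A minimal sketch, assuming the mon pods carry the label `app=rook-ceph-mon` and that the toolbox Deployment from section 3 is named rook-ceph-tools. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Delete all mon pods by label so that they restart with the new configuration.
restart_mons() {
  kubectl -n rook-ceph delete pod -l app=rook-ceph-mon
}

# Ask Ceph for its detailed health through the toolbox.
ceph_health() {
  kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph health detail
}
```

Running `restart_mons` and, once the mons are back, `ceph_health` would show whether the MON_CLOCK_SKEW warning has cleared.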

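5. Using Ceph Block Storage

5.1 Creating the StorageClass and the Ceph Pool

Block storage is typically used when a single pod mounts a volume. It is comparable to attaching a new disk to a server for the exclusive use of one application.

In production, the replica size should be at least 3, and it must not exceed the number of OSDs.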

[root@k8s-master01 rbd]# pwd

/root/yaml/rook/rook/deploy/examples/csi/rbd

[root@k8s-master01 rbd]# vim storageclass.yaml

apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph # namespace:cluster
spec:
  failureDomain: host
  replicated:
    size: 3
    # Disallow setting pool with replica 1, this could lead to data loss without recovery.
    # Make sure you're *ABSOLUTELY CERTAIN* that is what you want
    requireSafeReplicaSize: true
    # gives a hint (%) to Ceph in terms of expected consumption of the total cluster capacity of a given pool
    # for more info: https://docs.ceph.com/docs/master/rados/operations/placement-groups/#specifying-expected-pool-size
    #targetSizeRatio: .5
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block
provisioner: rook-ceph.rbd.csi.ceph.com # csi-provisioner-name
parameters:
  # clusterID is the namespace where the rook cluster is running
  # If you change this namespace, also change the namespace below where the secret namespaces are defined
  clusterID: rook-ceph # namespace:cluster
  # If you want to use erasure coded pool with RBD, you need to create
  # two pools. one erasure coded and one replicated.
  # You need to specify the replicated pool here in the `pool` parameter, it is
  # used for the metadata of the images.
  # The erasure coded pool must be set as the `dataPool` parameter below.
  #dataPool: ec-data-pool
  pool: replicapool
  # (optional) mapOptions is a comma-separated list of map options.
  # For krbd options refer
  # https://docs.ceph.com/docs/master/man/8/rbd/#kernel-rbd-krbd-options
  # For nbd options refer
  # https://docs.ceph.com/docs/master/man/8/rbd-nbd/#options
  # mapOptions: lock_on_read,queue_depth=1024
  # (optional) unmapOptions is a comma-separated list of unmap options.
  # For krbd options refer
  # https://docs.ceph.com/docs/master/man/8/rbd/#kernel-rbd-krbd-options
  # For nbd options refer
  # https://docs.ceph.com/docs/master/man/8/rbd-nbd/#options
  # unmapOptions: force
  # (optional) Set it to true to encrypt each volume with encryption keys
  # from a key management system (KMS)
  # encrypted: "true"
  # (optional) Use external key management system (KMS) for encryption key by
  # specifying a unique ID matching a KMS ConfigMap. The ID is only used for
  # correlation to configmap entry.
  # encryptionKMSID: <kms-config-id>
  # RBD image format. Defaults to "2".
  imageFormat: "2"
  # RBD image features
  # Available for imageFormat: "2". Older releases of CSI RBD
  # support only the `layering` feature. The Linux kernel (KRBD) supports the
  # full complement of features as of 5.4
  # `layering` alone corresponds to Ceph's bitfield value of "2" ;
  # `layering` + `fast-diff` + `object-map` + `deep-flatten` + `exclusive-lock` together
  # correspond to Ceph's OR'd bitfield value of "63". Here we use
  # a symbolic, comma-separated format:
  # For 5.4 or later kernels:
  #imageFeatures: layering,fast-diff,object-map,deep-flatten,exclusive-lock
  # For 5.3 or earlier kernels:
  imageFeatures: layering
  # The secrets contain Ceph admin credentials. These are generated automatically by the operator
  # in the same namespace as the cluster.
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph # namespace:cluster
  csi.storage.k8s.io/controller-expand-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph # namespace:cluster
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph # namespace:cluster
  # Specify the filesystem type of the volume. If not specified, csi-provisioner
  # will set default as `ext4`. Note that `xfs` is not recommended due to potential deadlock
  # in hyperconverged settings where the volume is mounted on the same node as the osds.
  csi.storage.k8s.io/fstype: ext4
# uncomment the following to use rbd-nbd as mounter on supported nodes
# **IMPORTANT**: CephCSI v3.4.0 onwards a volume healer functionality is added to reattach
# the PVC to application pod if nodeplugin pod restart.
# Its still in Alpha support. Therefore, this option is not recommended for production use.
#mounter: rbd-nbd
allowVolumeExpansion: true # whether volume expansion is allowed
reclaimPolicy: Delete # reclaim policy

Create the StorageClass and the pool:

[root@k8s-master01 rbd]# kubectl apply -f storageclass.yaml

cephblockpool.ceph.rook.io/replicapool created

storageclass.storage.k8s.io/rook-ceph-block created

[root@k8s-master01 rbd]# kubectl get cephblockpool -n rook-ceph

NAME PHASE TYPE FAILUREDOMAIN AGE

replicapool Ready Replicated host 22s

[root@k8s-master01 rbd]#

[root@k8s-master01 rbd]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

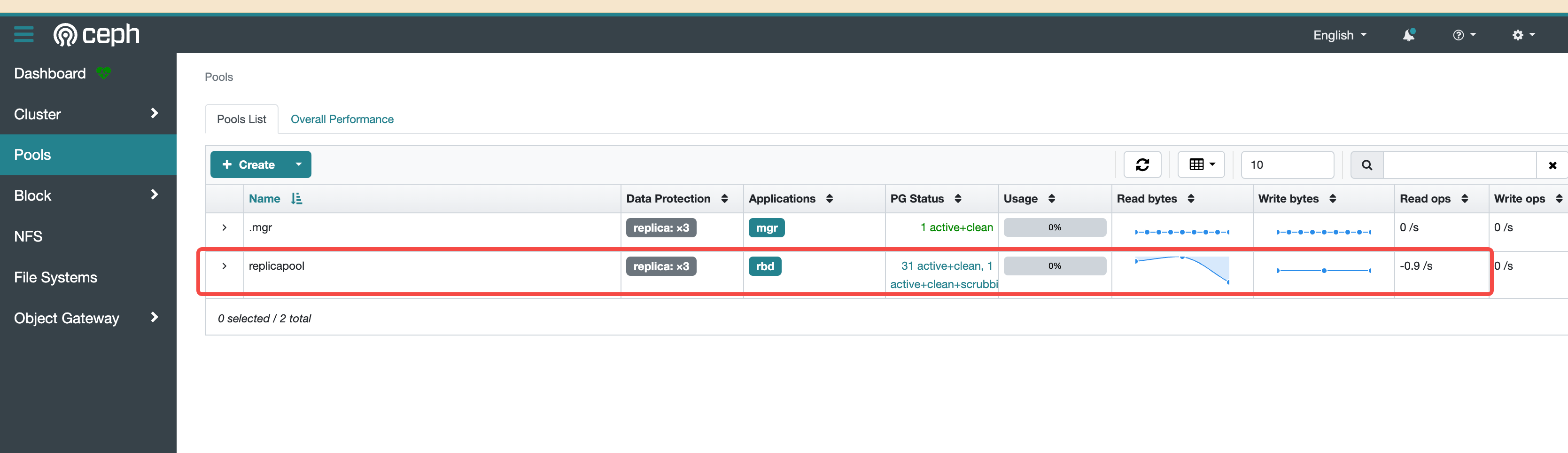

rook-ceph-block rook-ceph.rbd.csi.ceph.com Delete Immediate true 27s此时可以在 ceph dashboard 查看到改 Pool,如果没有显示说明没有创建成功

5.2 Mount Test

Create a MySQL service as a test:

[root@k8s-master01 examples]# pwd

/root/yaml/rook/rook/deploy/examples

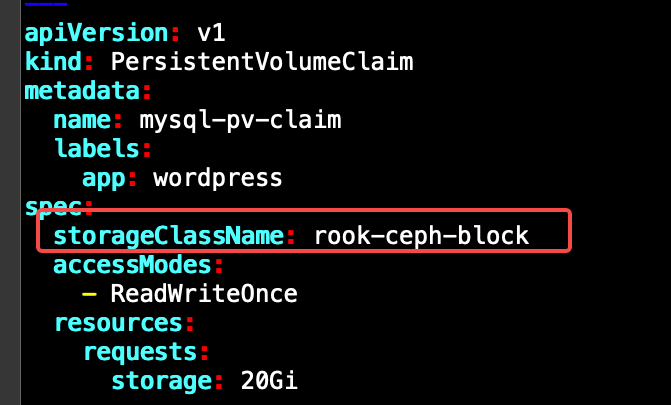

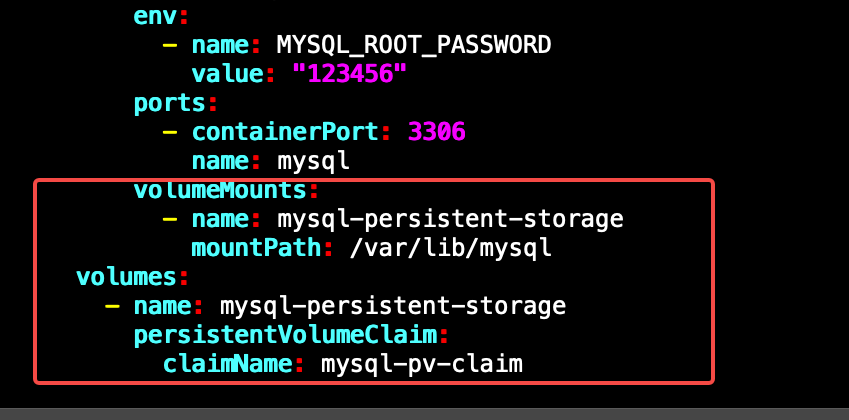

[root@k8s-master01 examples]# kubectl apply -f mysql.yaml

service/wordpress-mysql created

persistentvolumeclaim/mysql-pv-claim created

deployment.apps/wordpress-mysql created

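The PVC in this manifest references the StorageClass created above. A PV is then provisioned dynamically and the backing storage is created in Ceph. From now on, a PVC only needs storageClassName set to that StorageClass to get storage from the Rook-managed Ceph cluster. For a StatefulSet, set storageClassName inside volumeClaimTemplates to the same StorageClass and the PVs are provisioned dynamically for each pod.

The volumes section of the MySQL Deployment mounts this PVC.

MySQL data cannot be shared by several instances on the same storage, so block storage is the usual choice. It is equivalent to adding a new disk for MySQL alone.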
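The mysql.yaml manifest appears only as screenshots in this article, so here is a minimal sketch of what a PVC bound to the new StorageClass could look like. The PVC name demo-rbd-pvc, the 1Gi size, and the helper name `create_demo_pvc` are illustrative assumptions. Only the storageClassName rook-ceph-block comes from the steps above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply a small RWO PVC that uses the rook-ceph-block StorageClass.
create_demo_pvc() {
  kubectl apply -f - <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-rbd-pvc          # hypothetical name
spec:
  storageClassName: rook-ceph-block
  accessModes:
    - ReadWriteOnce           # block volumes are mounted by a single node
  resources:
    requests:
      storage: 1Gi            # arbitrary size for the sketch
EOF
}
```

After `create_demo_pvc`, `kubectl get pvc demo-rbd-pvc` should report the claim as Bound once the provisioner has created the RBD image.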

[root@k8s-master01 examples]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-1a205d15-3d0b-441f-9811-31e974aff4aa 20Gi RWO Delete Bound default/mysql-pv-claim rook-ceph-block 4m21s

[root@k8s-master01 examples]#

[root@k8s-master01 examples]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

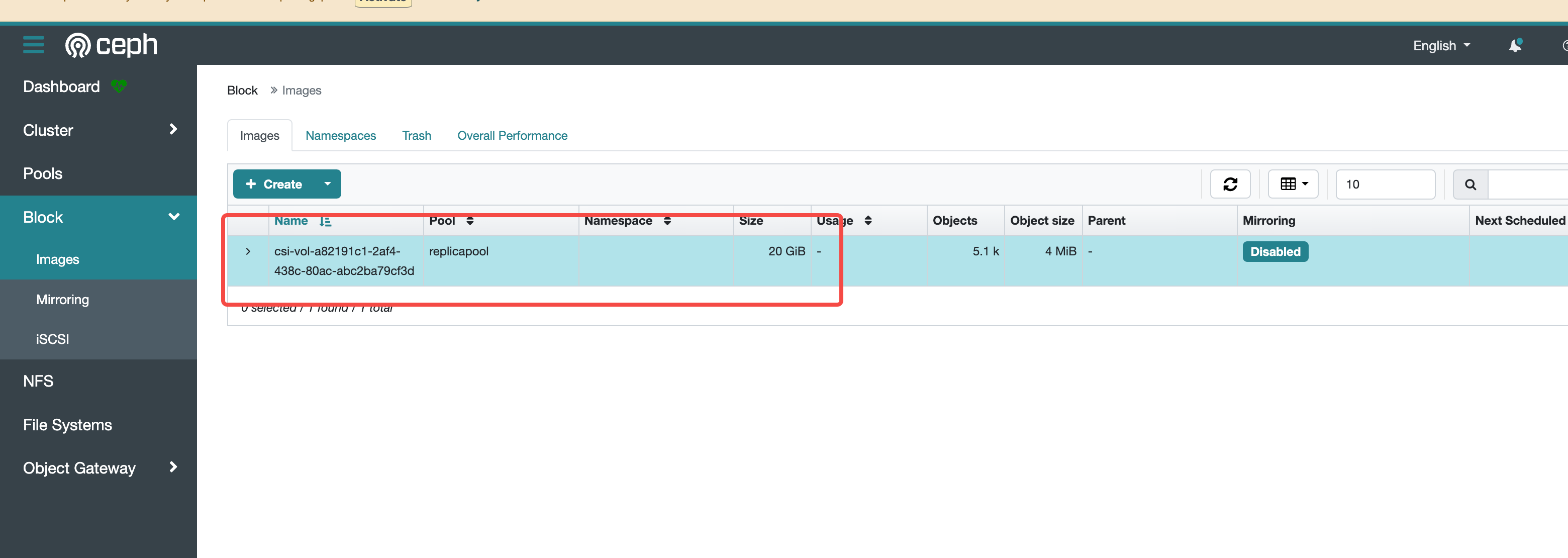

mysql-pv-claim Bound pvc-1a205d15-3d0b-441f-9811-31e974aff4aa 20Gi RWO rook-ceph-block 4m24s可以看到通过storageclass动态创建了对应的pv,此时在dashboard上可以看到对应的image

此时每个副本的存储都是独立的

下面是statefulset的演示

只需要在statefulset中添加volumeClaimTemplates,并指定storageclass为刚才创建的名称就可以自动生成pv和pvc

[root@k8s-master01 examples]# cat statefu.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
    - port: 80
      name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx # has to match .spec.template.metadata.labels
  serviceName: "nginx"
  replicas: 3 # by default is 1
  template:
    metadata:
      labels:
        app: nginx # has to match .spec.selector.matchLabels
    spec:
      terminationGracePeriodSeconds: 10
      containers:
        - name: web
          image: harbor.dujie.com/dujie/nginx:1.15.12
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: www
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: "rook-ceph-block"
        resources:
          requests:
            storage: 20Gi

6. Using the Shared File System

A shared file system is typically used when several pods share one volume.

6.1 Creating the Shared File System

[root@k8s-master01 examples]# pwd

/root/yaml/rook/rook/deploy/examples

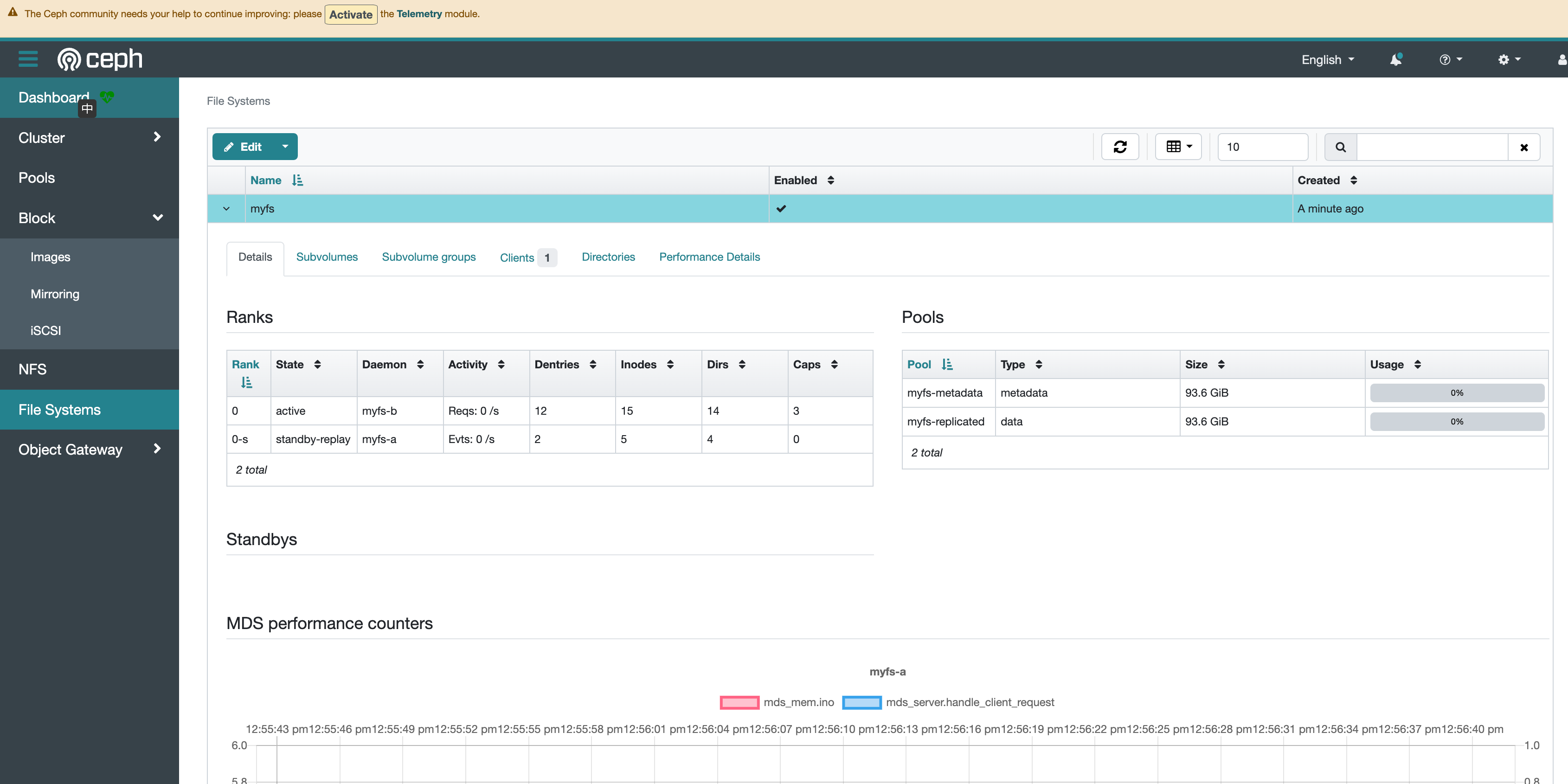

[root@k8s-master01 examples]# kubectl apply -f filesystem.yaml

cephfilesystem.ceph.rook.io/myfs created

cephfilesystemsubvolumegroup.ceph.rook.io/myfs-csi created

After creation, the MDS pods start. Wait until they are running before creating any PV.

[root@k8s-master01 examples]# kubectl get pod -n rook-ceph -l app=rook-ceph-mds

NAME READY STATUS RESTARTS AGE

rook-ceph-mds-myfs-a-5d4fb7bdd5-4n2tg 2/2 Running 0 59s

rook-ceph-mds-myfs-b-6774796659-wn8qd 2/2 Running 0 57s

6.2 Creating the StorageClass for the Shared File System

[root@k8s-master01 cephfs]# pwd

/root/yaml/rook/rook/deploy/examples/csi/cephfs

[root@k8s-master01 cephfs]# kubectl apply -f storageclass.yaml

storageclass.storage.k8s.io/rook-cephfs configured

From now on, setting storageClassName to rook-cephfs on a PVC creates shared file storage. Like NFS, it lets several pods share the same data.

[root@k8s-master01 cephfs]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

rook-ceph-block rook-ceph.rbd.csi.ceph.com Delete Immediate true 99m

rook-cephfs rook-ceph.cephfs.csi.ceph.com Delete Immediate true 21m

6.3 Mount Test

[root@k8s-master01 cephfs]# cat fstest.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cephfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 15Gi
  storageClassName: rook-cephfs
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "2"
  labels:
    app: fstest
  name: fstest
  namespace: default
spec:
  progressDeadlineSeconds: 600
  replicas: 5
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: fstest
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: fstest
    spec:
      containers:
        - image: harbor.dujie.com/dujie/nginx:1.15.12
          imagePullPolicy: IfNotPresent
          name: nginx
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - name: fstest-volume
              mountPath: /data/test
      dnsPolicy: ClusterFirst
      imagePullSecrets:
        - name: registry-secret
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
        - name: fstest-volume
          persistentVolumeClaim:
            claimName: cephfs-pvc
            readOnly: false

[root@k8s-master01 cephfs]# kubectl apply -f fstest.yaml

[root@k8s-master01 cephfs]# kubectl get pods

NAME READY STATUS RESTARTS AGE

fstest-544bbcb957-4dcfj 1/1 Running 0 2m26s

fstest-544bbcb957-6fztb 1/1 Running 0 2m26s

fstest-544bbcb957-l5b7l 1/1 Running 0 2m26s

fstest-544bbcb957-tsjtb 1/1 Running 0 2m26s

fstest-544bbcb957-wfqq4 1/1 Running 0 2m26s

Enter the first pod, confirm that the mount directory exists, and create a file in it. Then check from another pod whether the new file is visible.

[root@k8s-master01 cephfs]# kubectl exec -it fstest-544bbcb957-4dcfj bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@fstest-544bbcb957-4dcfj:/#

root@fstest-544bbcb957-4dcfj:/# ls /data/

test

root@fstest-544bbcb957-4dcfj:/# touch /data/test/hahah.txt

root@fstest-544bbcb957-4dcfj:/# ls /data/test/

hahah.txt

root@fstest-544bbcb957-4dcfj:/# exit

exit

[root@k8s-master01 cephfs]# kubectl exec -it fstest-544bbcb957-wfqq4 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@fstest-544bbcb957-wfqq4:/# ls /data/test/

hahah.txt

root@fstest-544bbcb957-wfqq4:/# exit

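exit

Note that claimName is the name of the PVC.

Five pods have been created in total. All five share one volume mounted at /data/test, so the data in that directory is shared by all five containers.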
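The manual check in two pods can be extended to all replicas with a small loop. A minimal sketch: the label `app=fstest` and the path /data/test come from fstest.yaml above, and `list_shared_dir` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the shared directory from every fstest pod.
# If CephFS sharing works, each pod prints the same file names.
list_shared_dir() {
  local pod
  for pod in $(kubectl get pods -l app=fstest -o name); do
    echo "== $pod"
    kubectl exec "$pod" -- ls /data/test
  done
}
```

Calling `list_shared_dir` after creating hahah.txt in one pod should show the file under every pod heading.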

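7. PVC Expansion

Expanding a shared file system PVC requires Kubernetes 1.15+.

Expanding a block storage PVC requires Kubernetes 1.16+.

PVC expansion requires the ExpandCSIVolumes feature. Recent Kubernetes versions already enable it by default (see the screenshot below).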

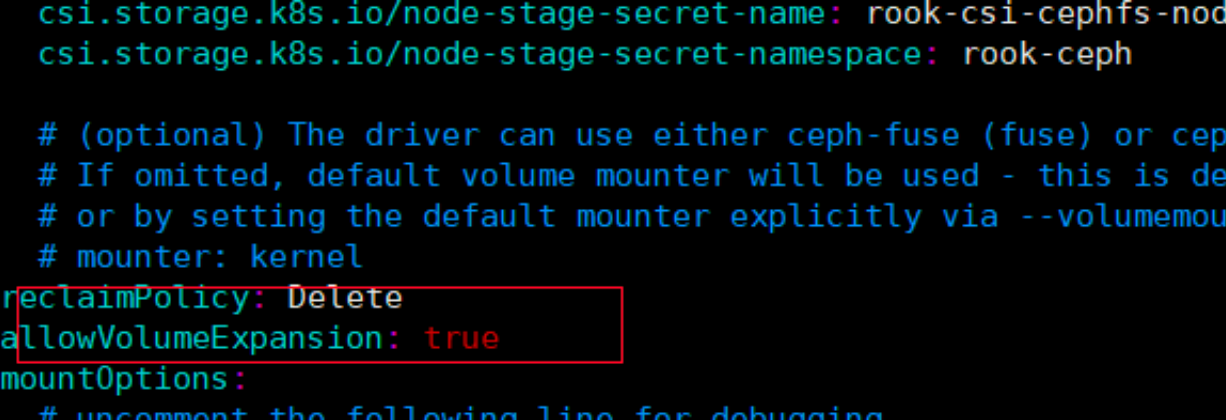

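7.1 Expanding a Shared File System PVC

Find the StorageClass created for the shared file system and make sure allowVolumeExpansion is set to true. Newer versions default to true. If it is not true, change it and run kubectl apply.

Edit an existing PVC: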

[root@k8s-master01 cephfs]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

cephfs-pvc Bound pvc-65327259-0add-4d22-a178-9021a4287442 15Gi RWX rook-cephfs 10m

mysql-pv-claim Bound pvc-1a205d15-3d0b-441f-9811-31e974aff4aa 20Gi RWO rook-ceph-block 104m

www-web-0 Bound pvc-017ff95d-4c9b-4502-bfba-67c407a959c9 20Gi RWO rook-ceph-block 97m

www-web-1 Bound pvc-84edb236-2940-4297-86f9-b0a9ea9b9390 20Gi RWO rook-ceph-block 97m

www-web-2 Bound pvc-a2740af1-065d-44fa-b2e6-9f407c306b9b 20Gi RWO rook-ceph-block 92m

[root@k8s-master01 cephfs]#

[root@k8s-master01 cephfs]# kubectl edit pvc cephfs-pvc

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

#

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"PersistentVolumeClaim","metadata":{"annotations":{},"name":"cephfs-pvc","namespace":"default"},"spec":{"accessModes":["ReadWriteMany"],"resources":{"requests":{"storage":"15Gi"}},"storageClassName":"rook-cephfs"}}
    pv.kubernetes.io/bind-completed: "yes"
    pv.kubernetes.io/bound-by-controller: "yes"
    volume.beta.kubernetes.io/storage-provisioner: rook-ceph.cephfs.csi.ceph.com
    volume.kubernetes.io/storage-provisioner: rook-ceph.cephfs.csi.ceph.com
  creationTimestamp: "2024-06-26T05:12:23Z"
  finalizers:
  - kubernetes.io/pvc-protection
  name: cephfs-pvc
  namespace: default
  resourceVersion: "2095326"
  uid: 65327259-0add-4d22-a178-9021a4287442
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 25Gi
  storageClassName: rook-cephfs
  volumeMode: Filesystem
  volumeName: pvc-65327259-0add-4d22-a178-9021a4287442
status:
  accessModes:
  - ReadWriteMany
  capacity:
    storage: 15Gi
  phase: Bound

"/tmp/kubectl-edit-2357678919.yaml" 36L, 1315C written

persistentvolumeclaim/cephfs-pvc edited
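The interactive edit above can also be expressed as a one-line patch, which is easier to script. A minimal sketch: `resize_pvc` is a hypothetical helper name, and the PVC name and size are passed as arguments.

```shell
#!/usr/bin/env bash
# Hypothetical helper: request a new size for a PVC with a JSON merge patch.
# Only spec.resources.requests.storage is changed; everything else is left alone.
resize_pvc() {
  local name="$1" size="$2"
  kubectl patch pvc "$name" --type merge \
    -p "{\"spec\":{\"resources\":{\"requests\":{\"storage\":\"$size\"}}}}"
}
```

For the PVC in this section, the call would be `resize_pvc cephfs-pvc 25Gi`. Note that a PVC can only grow; a request for a smaller size is rejected.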

[root@k8s-master01 cephfs]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

cephfs-pvc Bound pvc-65327259-0add-4d22-a178-9021a4287442 25Gi RWX rook-cephfs 11m

8. PVC Snapshots

PVC snapshots require Kubernetes 1.17 or later.

8.1 Block Storage Snapshots

8.1.1 Creating the VolumeSnapshotClass

[root@k8s-master01 rbd]# pwd

/root/yaml/rook/rook/deploy/examples/csi/rbd

[root@k8s-master01 rbd]# kubectl apply -f snapshotclass.yaml

volumesnapshotclass.snapshot.storage.k8s.io/csi-rbdplugin-snapclass created

8.1.2 Creating a Snapshot

First create a directory and a file inside the web container created earlier:

[root@k8s-master01 rbd]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 101m

web-1 1/1 Running 0 101m

web-2 1/1 Running 0 101m

[root@k8s-master01 rbd]# kubectl exec -it web-0 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@web-0:/#

root@web-0:/# cd /usr/share/nginx/html

root@web-0:/usr/share/nginx/html# touch haha.txt

root@web-0:/usr/share/nginx/html# mkdir mltest

root@web-0:/usr/share/nginx/html#

root@web-0:/usr/share/nginx/html# ls

haha.txt lost+found mltest

root@web-0:/usr/share/nginx/html# ls -l

total 20

-rw-r--r-- 1 root root 0 Jun 26 05:41 haha.txt

drwx------ 2 root root 16384 Jun 26 03:45 lost+found

drwxr-xr-x 2 root root 4096 Jun 26 05:41 mltest

In snapshot.yaml, set source.persistentVolumeClaimName to the PVC that should be snapshotted:

[root@k8s-master01 rbd]# pwd

/root/yaml/rook/rook/deploy/examples/csi/rbd

[root@k8s-master01 rbd]#

[root@k8s-master01 rbd]# cat snapshot.yaml

---
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: rbd-pvc-snapshot
spec:
  volumeSnapshotClassName: csi-rbdplugin-snapclass
  source:
    persistentVolumeClaimName: www-web-0

[root@k8s-master01 rbd]# kubectl apply -f snapshot.yaml

volumesnapshot.snapshot.storage.k8s.io/rbd-pvc-snapshot created

[root@k8s-master01 rbd]# kubectl get volumesnapshot

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

rbd-pvc-snapshot true www-web-0 20Gi csi-rbdplugin-snapclass snapcontent-53ec9a13-354f-4dcd-9a5d-9dfa13ed4430 10s 10s
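The READYTOUSE column above is what matters before restoring from a snapshot. A minimal sketch of reading that field directly; `snapshot_ready` is a hypothetical helper name, and the snapshot name is the one created above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the readyToUse status field of a VolumeSnapshot
# in the current namespace ("true" once the snapshot can be restored from).
snapshot_ready() {
  kubectl get volumesnapshot "$1" -o jsonpath='{.status.readyToUse}'
}
```

A script could, for example, loop on `[ "$(snapshot_ready rbd-pvc-snapshot)" = "true" ]` before applying the restore manifest.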

[root@k8s-master01 rbd]# kubectl get volumesnapshotclasses.snapshot.storage.k8s.io

NAME DRIVER DELETIONPOLICY AGE

csi-rbdplugin-snapclass rook-ceph.rbd.csi.ceph.com Delete 14m

8.1.3 Creating a PVC from a Snapshot

To create a PVC that already contains specific data, restore it from a snapshot:

[root@k8s-master01 rbd]# pwd

/root/yaml/rook/rook/deploy/examples/csi/rbd

[root@k8s-master01 rbd]# cat pvc-restore.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc-restore
spec:
  storageClassName: rook-ceph-block
  dataSource:
    name: rbd-pvc-snapshot
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 21Gi

Note: dataSource.name is the snapshot name, storageClassName is the StorageClass of the new PVC, and the requested storage must not be smaller than the size of the original PVC.

[root@k8s-master01 rbd]# kubectl apply -f pvc-restore.yaml

persistentvolumeclaim/rbd-pvc-restore created

[root@k8s-master01 rbd]#

[root@k8s-master01 rbd]#

[root@k8s-master01 rbd]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

cephfs-pvc Bound pvc-65327259-0add-4d22-a178-9021a4287442 25Gi RWX rook-cephfs 44m

mysql-pv-claim Bound pvc-1a205d15-3d0b-441f-9811-31e974aff4aa 20Gi RWO rook-ceph-block 139m

rbd-pvc-restore Bound pvc-38c4048f-b799-4e73-9678-73f261f5d882 21Gi RWO rook-ceph-block 6s

www-web-0 Bound pvc-017ff95d-4c9b-4502-bfba-67c407a959c9 20Gi RWO rook-ceph-block 131m

www-web-1 Bound pvc-84edb236-2940-4297-86f9-b0a9ea9b9390 20Gi RWO rook-ceph-block 131m

www-web-2 Bound pvc-a2740af1-065d-44fa-b2e6-9f407c306b9b 20Gi RWO rook-ceph-block 127m

8.1.4 Verifying the Data

Create a pod that mounts the restored PVC and check whether it contains the directory and file created earlier:

[root@k8s-master01 rbd]# cat pod.yaml

---
apiVersion: v1
kind: Pod
metadata:
  name: csirbd-demo-pod
spec:
  containers:
    - name: web-server
      image: registry.cn-hangzhou.aliyuncs.com/dyclouds/nginx:1.15.12
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: rbd-pvc-restore
        readOnly: false

[root@k8s-master01 rbd]# kubectl apply -f pod.yaml

pod/csirbd-demo-pod created

# The pod now contains the directory and file created earlier

[root@k8s-master01 rbd]# kubectl get pods

NAME READY STATUS RESTARTS AGE

csirbd-demo-pod 1/1 Running 0 9m10s

fstest-544bbcb957-4dcfj 1/1 Running 0 56m

fstest-544bbcb957-6fztb 1/1 Running 0 56m

fstest-544bbcb957-l5b7l 1/1 Running 0 56m

fstest-544bbcb957-tsjtb 1/1 Running 0 56m

fstest-544bbcb957-wfqq4 1/1 Running 0 56m

web-0 1/1 Running 0 127m

web-1 1/1 Running 0 126m

web-2 1/1 Running 0 126m

wordpress-mysql-59d6d7c875-s9n2g 1/1 Running 0 150m

[root@k8s-master01 rbd]# kubectl exec -it csirbd-demo-pod bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@csirbd-demo-pod:/# ls /var/lib/www/html

haha.txt lost+found mltest

9. PVC Cloning

[root@k8s-master01 rbd]# pwd

/root/yaml/rook/rook/deploy/examples/csi/rbd

[root@k8s-master01 rbd]# cat pvc-clone.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc-clone
spec:
  storageClassName: rook-ceph-block
  dataSource:
    name: www-web-0
    kind: PersistentVolumeClaim
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 21Gi

[root@k8s-master01 rbd]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

cephfs-pvc Bound pvc-65327259-0add-4d22-a178-9021a4287442 25Gi RWX rook-cephfs 67m

mysql-pv-claim Bound pvc-1a205d15-3d0b-441f-9811-31e974aff4aa 20Gi RWO rook-ceph-block 162m

rbd-pvc-clone Bound pvc-c5f7d985-8582-41cc-94d6-3c9fc5c2987f 21Gi RWO rook-ceph-block 2s

rbd-pvc-restore Bound pvc-38c4048f-b799-4e73-9678-73f261f5d882 21Gi RWO rook-ceph-block 23m

www-web-0 Bound pvc-017ff95d-4c9b-4502-bfba-67c407a959c9 20Gi RWO rook-ceph-block 155m

www-web-1 Bound pvc-84edb236-2940-4297-86f9-b0a9ea9b9390 20Gi RWO rook-ceph-block 155m

www-web-2 Bound pvc-a2740af1-065d-44fa-b2e6-9f407c306b9b 20Gi RWO rook-ceph-block 150m

Note that in pvc-clone.yaml, dataSource.name is the name of the PVC being cloned (www-web-0 here), storageClassName is the StorageClass of the new PVC, and the requested storage must not be smaller than the size of the source PVC.